Ranker is the people-powered source of definitive rankings

Our editors have curated tens of thousands of lists about all aspects of pop culture and beyond.

Millions of visitors shape these rankings by voting, making Ranker the ULTIMATE source for the best (and worst) of everything. We also turn the more than one billion votes cast on Ranker into opinion graphs of consumer sentiment across entertainment and beyond. These graphs are deep psychographics of what fans love, at a granular level, across shows, films, celebrities, games, music, and countless other categories.

1.4 Billion Votes

100 Million Voters

256,000 Rankings

Business Solutions


Brands & Agencies

Knowing age groups, geographic areas, and other basic information isn’t enough: you need to know what your audience loves. Using the largest licensable set of post-consumption opinion data on the market, Ranker powers high-performance campaigns that hit your target.


Service Providers & OEMs

Even after a decade of innovation, only 55% of consumers are happy with the content recommendations they get from video service providers*. Metadata alone clearly isn’t enough: Ranker can help you redefine your approach to discovery by focusing on what your users really like.


Publishers & Platforms

Plenty of other publishers have unique editorial voices, but only Ranker offers the voice of the people. License our library of highly engaging content and videos as a resource for curious users or as a draw that keeps readers coming back to your platform again and again.

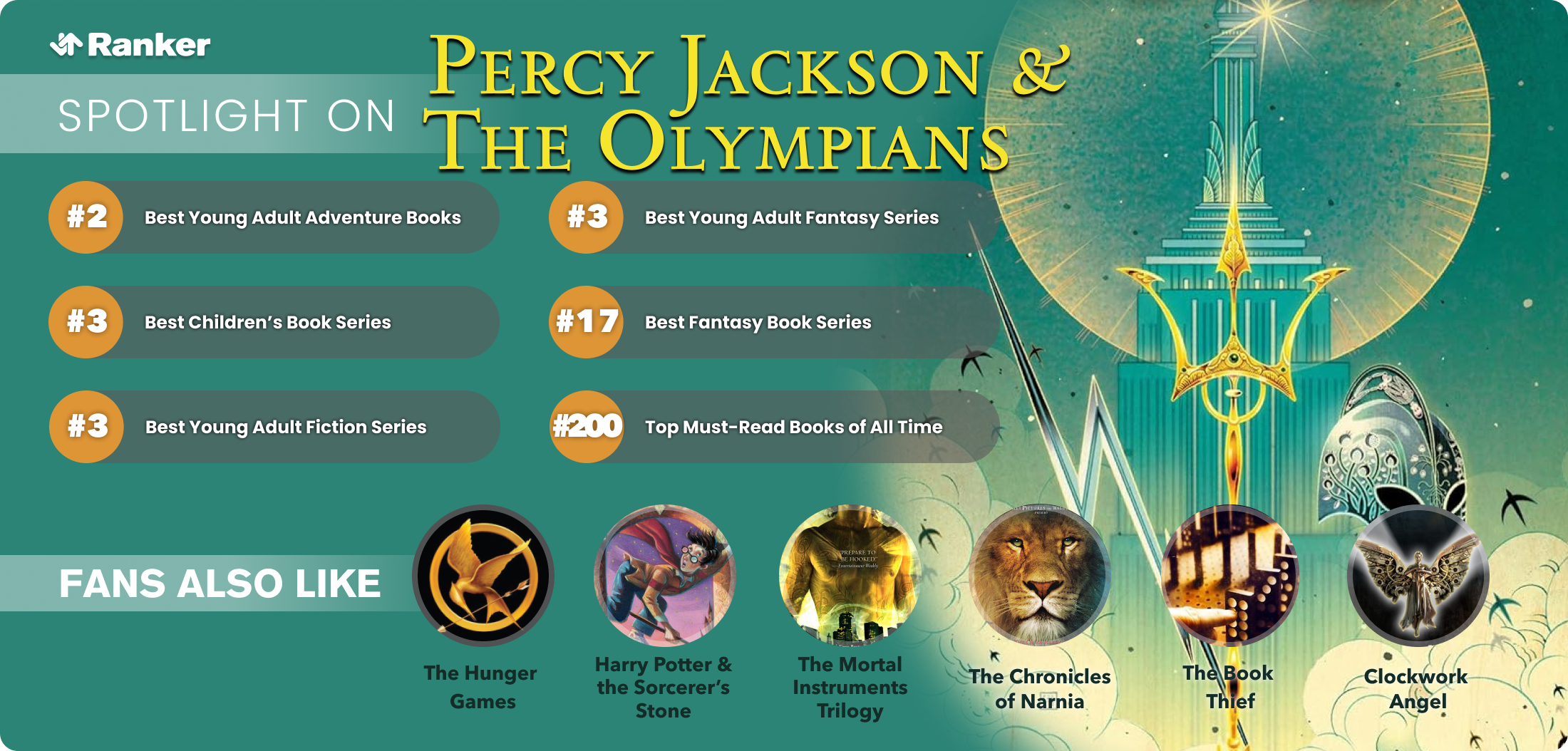

Ranker Data Stories

Pop culture fans have cast over one billion votes on Ranker. Here are the latest data stories our analysts have crafted with Ranker data.